Because data collection methods strongly influence the validity of research outcomes, data collection is considered a crucial aspect of any study.

May 2025 | Source: News-Medical

Transcription and open-ended coding play a significant role in qualitative research. These processes transform raw audio or video data into organized, actionable data, revealing deeper levels of understanding and recurring themes in the text of interviews, focus groups, and surveys. However, two common challenges researchers face during transcription and coding are overlapping speech and accents. These challenges can reduce transcription accuracy and coding quality, and thus affect the research findings.

Overlapping speech occurs when two or more speakers talk at the same time. When this happens, it can be difficult to identify individual speakers and to follow the conversation, because the voices merge into a single audio input. Accents, like overlapping speech, can lead to distorted or misinterpreted speech. For example, regional, cultural, or language influences on pronunciation can cause a transcriber or speech recognition tool to mishear speech sounds. Both challenges therefore require care and dedicated strategies so that the data is recorded accurately and remains useful for coding and analysis. This article examines these two challenges and strategies for addressing them, drawing on the author’s personal experience.
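To make the definition of overlapping speech concrete, here is a minimal Python sketch that finds the time spans where two different speakers talk at once. It assumes the recording has already been split into speaker-labelled segments with start and end times in seconds (for example by a diarization tool or by hand). The `Segment` class, the `find_overlaps` function, and the sample data are hypothetical and serve only as an illustration.

```python
from dataclasses import dataclass
from itertools import combinations


@dataclass(frozen=True)
class Segment:
    """One stretch of speech by one speaker (hypothetical structure)."""
    speaker: str
    start: float  # seconds from the start of the recording
    end: float    # seconds from the start of the recording


def find_overlaps(segments):
    """Return (speaker_a, speaker_b, start, end) for every pair of
    segments from different speakers that overlap in time."""
    overlaps = []
    ordered = sorted(segments, key=lambda s: s.start)
    for a, b in combinations(ordered, 2):
        if a.speaker == b.speaker:
            continue
        start = max(a.start, b.start)
        end = min(a.end, b.end)
        if start < end:  # the two time spans intersect
            overlaps.append((a.speaker, b.speaker, start, end))
    return overlaps


# Illustrative data only, not taken from a real recording.
segments = [
    Segment("Moderator", 0.0, 4.2),
    Segment("P1", 3.5, 7.0),
    Segment("P2", 6.8, 9.0),
    Segment("Moderator", 9.5, 12.0),
]

for speaker_a, speaker_b, start, end in find_overlaps(segments):
    print(f"[{start:.1f}s-{end:.1f}s] overlap: {speaker_a} / {speaker_b}")
```

Spans flagged this way can be marked in the transcript so that a human transcriber listens to them again before coding begins.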

Before delving into the solutions, it’s important to fully understand the nature of these challenges and their impact on the transcription and coding process.

Both of these factors can compromise the integrity of the transcription and coding process, making it essential to adopt strategies to address them effectively.

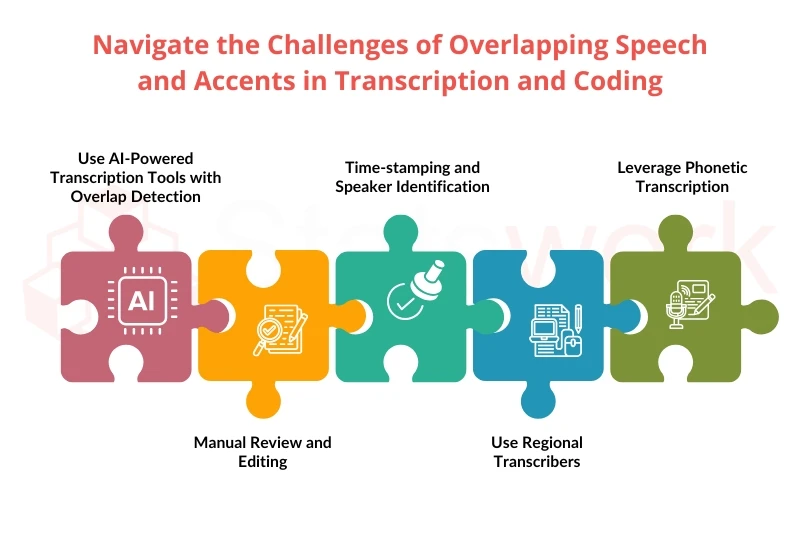

Overlapping speech is one of the most frequently encountered obstacles to transcribing group discussions, interviews, or focus groups. Here are several strategies that will be helpful when faced with this challenge:

Accents present another significant challenge during transcription, as they can affect the accuracy of speech recognition and lead to errors in interpretation. Here’s how to handle accent-related issues:

Once transcription is completed, researchers must analyze the data through open-ended coding. This step involves identifying and categorizing themes, concepts, and emotions within the responses. However, overlapping speech and accents can complicate this process as well.

Transcription and open-ended coding are necessary parts of qualitative research, but overlapping speech and accents can create barriers to both. By combining AI transcription technology, human-in-the-loop review of transcripts, and domain knowledge, researchers can overcome these barriers and produce high-quality, usable data for analysis.
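One way human-in-the-loop review can be organized is to have the reviewer focus on the words the transcription tool was least sure about. The sketch below assumes the tool reports a confidence score between 0 and 1 for each word. The `flag_for_review` function, the `[?word]` marker, and the 0.80 threshold are illustrative assumptions, not features of any particular product.

```python
def flag_for_review(words, threshold=0.80):
    """Mark words whose confidence is below the threshold.

    `words` is a list of (text, confidence) pairs; the threshold of 0.80
    is an arbitrary assumption and should be tuned per project.
    """
    marked = []
    for text, confidence in words:
        if confidence < threshold:
            marked.append(f"[?{text}]")  # reviewer should re-listen here
        else:
            marked.append(text)
    return " ".join(marked)


# Illustrative data only: a regional word the recognizer was unsure about.
words = [
    ("the", 0.98),
    ("patient", 0.95),
    ("felt", 0.91),
    ("knackered", 0.42),
    ("afterwards", 0.88),
]

print(flag_for_review(words))
```

Accent-related errors tend to show up as low-confidence words, so a marker like this gives the reviewer a short list of places to check against the audio.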

Proper handling of overlapping speech and accents preserves the context of the conversation and keeps the coding process from being biased or compromised. Through effective use of tools, processes, and methods, researchers can extract rich, actionable insights from qualitative data, even when they encounter transcription challenges like these.


