As the data collection methods have extreme influence over the validity of the research outcomes, it is considered as the crucial aspect of the studies

May 2025 | Source: News-Medical

With organizations entering the world of AI agents at an incredible pace, there is a growing recognition that human expertise is essential to ensuring these agents produce reliable, accurate, and trustworthy results. As we encounter stakeholders at Statswork, be they clients or readers, they want assurances along with automation. Organizations need assurances that their solutions are being robustly evaluated and improved upon based on continuous cycles of technology and human experience. [1]

Human-in-the-Loop (HITL) is important for agent evaluation because AI agents, even with the advances in accuracy, will occasionally produce minor errors, mishaps, misinterpretations, or inevitably a loss of context which cannot reliably be captured with automated metrics. With HITL there is human oversight in the QA and development phases, and we verify accuracy, relevance, clarity, and consistency of agent outputs and expected outputs. [1][2]

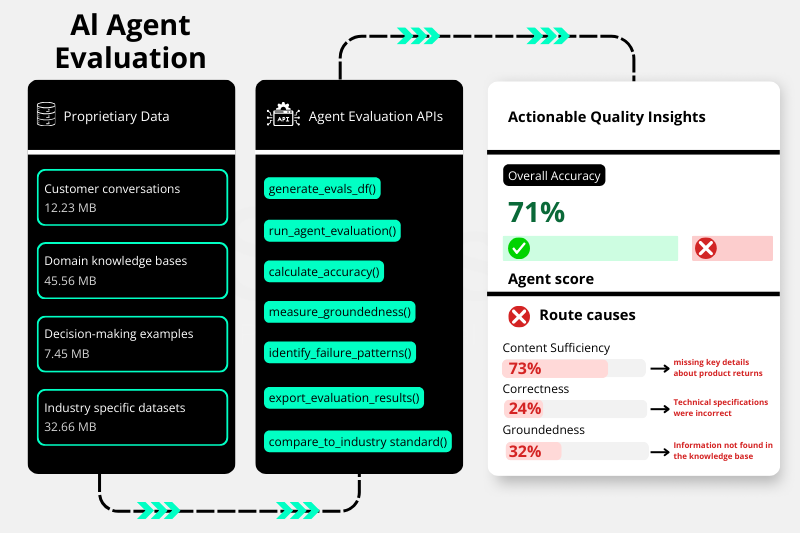

Modern appraisal of agents has brought with it not just code review or software algorithms. Agent evaluation is inclusive of:

These metrics are not just reinforced through the review of final agent output, but by auditing the intermediate decisions and interactions referenced in the Objectways categories described previously, what they called “the agent’s trajectory” which affords visibility into how and why agents reach conclusions.[4] [5]

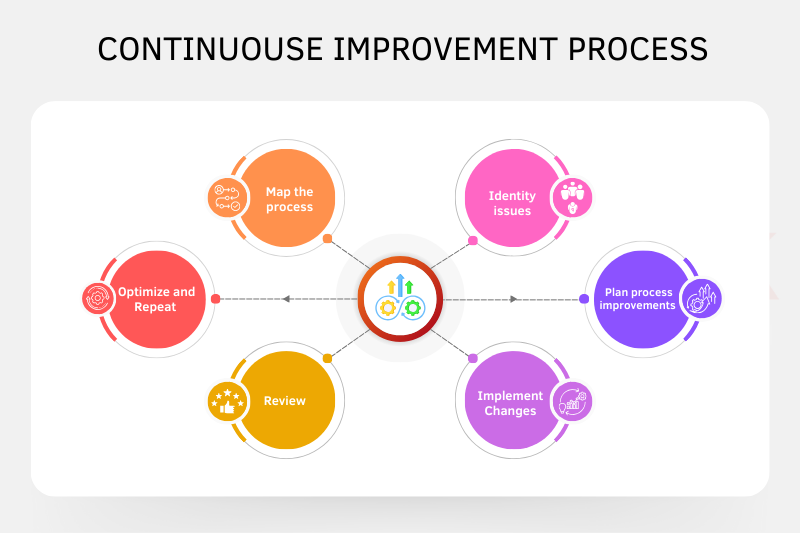

At Statswork, evaluation of agents isn’t a single task; it’s part of an ongoing process. This cycle looks like:

Tensor Act Studio and other tools allow you to create a scalable HITL process, and provide your organization with:

For example, imagine someone who is an agent of requests for statistical consultations. One client asked for help with more complex analysis for a clinical research project.

This process guarantees that agent outputs are not just automated but assured—they are rigorously reviewed and continually developed.

User Query Input

↓

AI Agent Generates Response

↓

Human Review & Validation

↓

Flag Issues & Provide Feedback

↓

Data Collection for Model Retraining

↓

Continuous Model Improvement

↓ (loops back to AI Agent Generates Response)

Scaling HITL systems can be difficult. Active learning is vital to help balance reviewer workloads: systems like Tensor Act Studio only send ambiguous cases to humans, based on uncertainty flags. This is productive use of resources, while maintaining agent quality.

It is essential to have bias detection. Statswork human reviewers use audits of agent outputs for fairness. They leverage structured frameworks, while also utilizing explainability tools to ensure that recommendations are fair and trustworthy.

Statswork’s priority of ethical AI is exhibited within their focus on bias detection. For all agent decisions a human reviewer assesses subjective bias; whether it is statistical, demographic or contextual, they utilize structured fairness auditing and explainability frameworks. This allows for the assurances that these recommendations are trustworthy and fair for everyone.

The Future with Statswork

As AI agents are evolving, Statswork’s HITL-centric evaluation method will lead the way in excellence standards in accuracy, relevance, clarity, and consistency. Statswork uses Tensor Act Studio, alongside automated and human intelligence, to enable organizations to deploy AI uses that are not only fast but also responsible and in alignment to their business objectives.

In conclusion, Statswork’s HITL-centric agent evaluation focuses on quality assurance, bias detection, contextualization and continuous improvement which enables organizations to leverage AI agents that are not only fast and scalable – but reliable, nuanced and in alignment to their business needs. [1] [2]

Statswork Delivers Quality Assurance for AI Agents

With Statswork’s HITL-centric approach, your AI will be evaluated for accuracy, relevance, and consistency. Contact us to learn how we can help.

WhatsApp us